Tensorflow_classification

Model Classify

from sklearn.preprocessing import LabelEncoder, StandardScaler

from sklearn import metrics

import logging

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

import seaborn as sns

import pandas as pd

import time

from hyperopt import fmin, tpe, hp, Trials

Suppress warnings and verbose TensorFlow logging

import warnings, os

warnings.filterwarnings('ignore')

os.environ['TF_CPP_MIN_LOG_LEVEL']='2'

import tensorflow as tf

tf.logging.set_verbosity(tf.logging.ERROR)

Define a method to load the data, split it, and display a pairs plot

def Get_Model_Data():

model = pd.read_csv('./model.csv')

print(model.head())

# Split the data into Train/Validation/Test with Train having 60% and test and validation 20% each

train, valid, test = np.split(model.sample(frac=1), [int(.6*len(model)), int(.8*len(model))])

features = list(['BoxRatio','Thrust', 'Velocity', 'OnBalRun', 'vwapGain'])

print("features: ", features)

X = train[features].as_matrix()

X = X.astype(np.float64)

standard_scaller = StandardScaler()

X = standard_scaller.fit_transform(X)

label_encoder = LabelEncoder()

label_encoder.fit(train["Altitude"])

y = label_encoder.transform(train["Altitude"])

x_train = train[features].as_matrix()

y_train = train['Altitude']

x_valid = valid[features].as_matrix()

y_valid = valid['Altitude']

x_test = test[features].as_matrix()

y_test = test['Altitude']

df = pd.DataFrame(data=train)

df.append(valid)

df.append(test)

df[features] = X

df['Altitude'] = y

mask = list(['BoxRatio','Thrust', 'Velocity', 'OnBalRun', 'vwapGain', 'Altitude'])

df_new = df[mask]

print(df_new.head())

# plt.ioff()

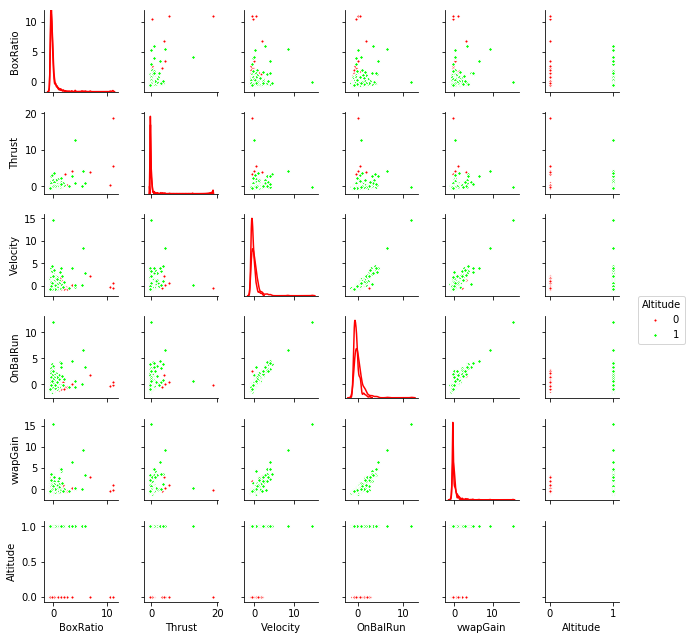

red_green = ["#ff0000", "#00ff00"]

sns.set_palette(red_green)

g = sns.pairplot(df_new,

diag_kind='kde',

hue='Altitude',

markers=["o", "D"],

size=1.5,

aspect=1,

plot_kws={"s": 10})

g.fig.subplots_adjust(right=0.9)

# g.savefig('model_classify.png', bbox_inches='tight')

return x_train, y_train, x_valid, y_valid, x_test, y_test
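The 60/20/20 split used above can be tried in isolation. The following is a minimal sketch on synthetic data (the array `data`, the row count, and the seed are assumptions, not values from `model.csv`). It also shows one common way to standardize all three sets with statistics taken from the training set only, which is a possible variation and not what `Get_Model_Data` does.

```python
import numpy as np

rng = np.random.default_rng(7)           # seed chosen arbitrarily for the sketch
n_rows = 1000                            # hypothetical number of rows
data = rng.normal(loc=3.0, scale=2.0, size=(n_rows, 5))

# Shuffle the rows, then cut at 60% and 80% to get train/validation/test
shuffled = rng.permutation(data)
train, valid, test = np.split(shuffled, [int(.6 * n_rows), int(.8 * n_rows)])

# Standardize with the training mean and standard deviation only
mean = train.mean(axis=0)
std = train.std(axis=0)
train_scaled = (train - mean) / std
valid_scaled = (valid - mean) / std
test_scaled = (test - mean) / std

print(train.shape, valid.shape, test.shape)
```

Reusing the training statistics for the validation and test sets keeps information from those sets out of the preprocessing step.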

Define the training method

def Train(x_train, y_train,
          x_valid, y_valid,
          hidden,
          activation,
          optimizer,
          dropout):
    feature_columns = tf.contrib.learn.infer_real_valued_columns_from_input(x_train)

    # config = tf.contrib.learn.RunConfig(tf_random_seed=7, save_checkpoints_secs=1)
    config = tf.contrib.learn.RunConfig(tf_random_seed=7)

    # print('hidden: ', hidden, ', activation: ', activation.__name__, ', dropout: ', dropout, ', optimizer: ', optimizer)

    # Metrics reported each time the validation monitor runs
    validation_metrics = {
        "accuracy":
            tf.contrib.learn.MetricSpec(
                metric_fn=tf.contrib.metrics.streaming_accuracy,
                prediction_key="classes"),
        "precision":
            tf.contrib.learn.MetricSpec(
                metric_fn=tf.contrib.metrics.streaming_precision,
                prediction_key="classes"),
        "recall":
            tf.contrib.learn.MetricSpec(
                metric_fn=tf.contrib.metrics.streaming_recall,
                prediction_key="classes")
    }

    # Stop early when the validation loss has not improved for 200 steps
    validation_monitor = tf.contrib.learn.monitors.ValidationMonitor(
        x_valid,
        y_valid,
        every_n_steps = 50,
        early_stopping_metric = "loss",
        early_stopping_metric_minimize = True,
        early_stopping_rounds = 200,
        metrics = validation_metrics)

    nn = tf.contrib.learn.DNNClassifier(
        feature_columns = feature_columns,
        hidden_units = hidden,
        activation_fn = activation,
        n_classes = 2,
        optimizer = optimizer,
        config = config,
        dropout = dropout
    )

    logging.getLogger().setLevel(logging.INFO)

    nn.fit(
        x = x_train,
        y = y_train,
        max_steps=500,
        monitors=[validation_monitor])

    # x_test and y_test are read from the notebook's global scope.
    # Despite the name, mse holds the classifier's loss on the test set,
    # not a mean squared error.
    ev = nn.evaluate(x=x_test, y=y_test, steps=1)
    mse = ev["loss"]

    return nn, mse
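The early-stopping settings passed to the validation monitor (`every_n_steps = 50`, `early_stopping_rounds = 200`, minimizing `"loss"`) amount to a simple rule: stop once the best validation loss is at least 200 steps old. The sketch below illustrates that rule in plain Python. The function `early_stopping_step` and the loss history are hypothetical, and this is not the monitor's actual implementation.

```python
def early_stopping_step(losses_by_step, early_stopping_rounds=200):
    """Return the step at which the rule would stop, or None if it never fires."""
    best_loss = float("inf")
    best_step = None
    for step, loss in losses_by_step:
        if loss < best_loss:
            # New best validation loss: remember where it happened
            best_loss, best_step = loss, step
        elif step - best_step >= early_stopping_rounds:
            # No improvement for early_stopping_rounds steps
            return step
    return None

# Hypothetical validation losses, one evaluation every 50 steps
history = [(50, 0.70), (100, 0.65), (150, 0.66), (200, 0.67), (250, 0.66), (300, 0.68)]
stop_at = early_stopping_step(history)
print(stop_at)  # 300: the best loss was at step 100, 200 steps earlier
```

With `max_steps=500`, a rule like this can only fire if the best loss occurs within the first 300 steps.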

Define an objective function for the Bayesian Hyperparameter Optimization

class switch(object):
    def __init__(self, value):
        self.value = value
        self.fall = False

    def __iter__(self):
        """Return the match method once, then stop"""
        yield self.match
        # A bare return ends the generator; raising StopIteration here
        # becomes a RuntimeError under Python 3.7+
        return

    def match(self, *args):
        """Indicate whether or not to enter a case suite"""
        if self.fall or not args:
            return True
        elif self.value in args:
            self.fall = True
            return True
        else:
            return False

def objective(params):
    global best_nn, best_mse, loop, max_loop

    # Unpack the sampled hyper-parameters
    # print(' ')
    for name, value in params.items():
        for case in switch(name):
            if case('hidden'):
                hid = value
                # print('hidden: ', hid)
                break
            if case('activation'):
                act = value
                # print('activation: ', act.__name__)
                break
            if case('optimizer'):
                opt = value
                # print('optimizer: ', opt)
                break

    nn, mse = Train(x_train, y_train,
                    x_valid, y_valid,
                    hidden = hid,
                    activation = act,
                    optimizer = opt,
                    dropout = 0.2)

    # Keep track of the best model seen so far
    loop = loop + 1
    if mse < best_mse:
        best_mse = mse
        best_nn = nn
        print(loop,'hid:',hid,'act:',act.__name__,'opt:',opt,'best mse:',best_mse)

    return mse
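Because `params` is an ordinary dictionary keyed by the names used in the search space, the `switch` helper could be replaced by direct lookups. A minimal sketch, assuming the same three keys (`hidden`, `activation`, `optimizer`); the helper `unpack_params` and the sample values are hypothetical:

```python
def unpack_params(params):
    """Return the three hyper-parameters by key instead of looping over the items."""
    return params['hidden'], params['activation'], params['optimizer']

# Hypothetical sample, shaped like what the objective receives
sample = {'hidden': [80, 40], 'activation': 'tanh', 'optimizer': 'Adam'}
hid, act, opt = unpack_params(sample)
print(hid, act, opt)
```

A missing key then raises a `KeyError` immediately, whereas the loop version only fails later when an unset variable is used.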

Get the training, validation, and testing datasets, and display a pairs plot

x_train, y_train, x_valid, y_valid, x_test, y_test = Get_Model_Data()

Date Symbol BuyIndex SellIndex BoxRatio Thrust Acceleration \

0 4/20/2017 NUE 132 214 1.232 1.064 28.436

1 5/9/2017 TDOC 132 294 0.496 0.780 69.170

2 11/3/2016 DYN 155 250 0.505 -0.573 95.105

3 7/27/2017 VZ 132 249 1.149 1.055 38.779

4 1/24/2017 VRAY 132 190 3.101 4.414 8.504

Velocity nPosVelo OnBalRun vwapGain Gain Altitude

0 1.107 33 3.360 0.782 0.8069 0

1 2.877 13 8.656 1.352 6.4000 1

2 2.258 22 4.547 0.505 2.2078 1

3 1.525 54 3.882 1.924 1.7749 1

4 1.577 59 4.017 0.826 1.3645 0

features: ['BoxRatio', 'Thrust', 'Velocity', 'OnBalRun', 'vwapGain']

BoxRatio Thrust Velocity OnBalRun vwapGain Altitude

5 -0.092818 0.444166 0.299739 0.739438 0.344576 0

767 -0.560196 -0.360944 0.067868 0.356868 -0.064465 1

234 -0.383533 -0.162137 1.652861 2.842184 2.125477 1

902 -0.510574 -0.342134 -0.591577 -0.549462 -0.217069 1

537 0.957027 0.013933 0.300385 0.568961 0.119079 1

Define the hyper-parameters

hidden_list = list([[40],[80],[100],[200],[300],[400],[500],

[80,40],[100,50],[200,100],[300,150],[400,200], [500,250],

[80,70,60,50],

[100,80,60,40],

[200,150,100,50],

[300,250,200,150],

[80,70,60,50,40],

[100,80,60,40,20],

[200,180,160,140,120],

[300,250,200,150,100],

[400,350,300,250,200],

[500,400,300,200,100],

[80,70,60,50,40,30],

[100,90,80,70,60,50],

[200,180,160,150,140,130],

[300,250,200,150,100,50],

[400,350,300,250,200,150],

[500,400,300,200,100,50]])

activation_list = [tf.sigmoid, tf.tanh, tf.nn.elu, tf.nn.softplus, tf.nn.softsign]

optimizer_list = ['SGD', 'Adam', 'Adagrad', 'RMSProp']

best_mse = 100000.0

loop = 0

max_loop = 500

start_time = int(time.time())

space = {

'hidden': hp.choice('hidden', hidden_list),

'activation': hp.choice('activation', activation_list),

'optimizer': hp.choice('optimizer', optimizer_list)

}
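As a rough sanity check on the size of this search space, the sketch below multiplies the number of options per hyper-parameter. The counts are read off the lists above (29 hidden layouts, 5 activations, 4 optimizers); the variable names are illustrative only.

```python
# Number of options per hyper-parameter, counted from the lists above
n_hidden = 29
n_activation = 5
n_optimizer = 4

total_combinations = n_hidden * n_activation * n_optimizer
max_loop = 500  # same value as in the notebook

print('combinations:', total_combinations)
print('evaluations per combination: %.2f' % (max_loop / total_combinations))
```

With 580 combinations and 500 evaluations, the search can reach most of the grid, although TPE samples rather than enumerates, so some combinations may be tried more than once and others not at all.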

Now run the Bayesian search

trials = Trials()

Compute the metrics and display the results

best = fmin(objective, space, algo=tpe.suggest, max_evals=max_loop)

best_hidden = hidden_list[best['hidden']]

best_activation = activation_list[best['activation']].__name__

best_optimizer = optimizer_list[best['optimizer']]

ev = best_nn.evaluate(x=x_test, y=y_test, steps=1)

mse = ev["loss"]

if not best_mse == mse: raise AssertionError

print('\nbest model:',

'\n hidden........ ', best_hidden,

'\n activation.... ', best_activation,

'\n optimizer..... ', best_optimizer,

'\n best mse...... ', best_mse)

predictions = list(best_nn.predict(x_test, as_iterable=True))

print(metrics.classification_report(y_true=y_test, y_pred=predictions))

cm = metrics.confusion_matrix(y_true=y_test, y_pred=predictions)

print('confusion matrix\n',cm)

true_negative = cm[0,0]

true_positive = cm[1,1]

false_negative = cm[1,0]

false_positive = cm[0,1]

total = true_negative + true_positive + false_negative + false_positive

accuracy = (true_positive + true_negative)/total

precision = (true_positive)/(true_positive + false_positive)

recall = (true_positive)/(true_positive + false_negative)

misclassification_rate = (false_positive + false_negative)/total

F1 = (2*true_positive)/(2*true_positive + false_positive + false_negative)

print('accuracy.................', accuracy)

print('precision................', precision)

print('recall...................', recall)

print('misclassification rate...', misclassification_rate)

print('F1.......................', F1)

print('Metrics computed with scikit-learn and TensorFlow:')

y_true = np.asarray(y_test)

y_pred = np.asarray(predictions)

# if not accuracy == metrics.accuracy_score(y_true, y_pred): raise AssertionError

# if not precision == metrics.precision_score(y_true, y_pred): raise AssertionError

# if not recall == metrics.recall_score(y_true, y_pred): raise AssertionError

# if not F1 == metrics.f1_score(y_true, y_pred): raise AssertionError

print('logloss..................', tf.Session().run(tf.losses.log_loss(predictions = y_pred, labels = y_true)))

print('logloss..................', metrics.log_loss(y_true, y_pred))

print('accuracy.................', metrics.accuracy_score(y_true, y_pred))

print('precision................', metrics.precision_score(y_true, y_pred))

print('recall...................', metrics.recall_score(y_true, y_pred))

print('F1.......................', metrics.f1_score(y_true, y_pred))

stream_auc = tf.contrib.metrics.streaming_auc(y_pred, y_true)

sess = tf.Session()

sess.run(tf.global_variables_initializer())

sess.run(tf.local_variables_initializer()) # streaming_auc keeps its counters in local variables, so they must be initialized

train_auc = sess.run(stream_auc)

print('auc......................', train_auc[1])

end_time = int(time.time())

d = divmod(end_time - start_time,86400) # days

h = divmod(d[1],3600) # hours

m = divmod(h[1],60) # minutes

s = m[1] # seconds

print('%d days, %d hours, %d minutes, %d seconds' % (d[0],h[0],m[0],s))
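The confusion-matrix arithmetic in this cell can be checked by hand on a small example. The sketch below uses a hypothetical 2x2 matrix (the counts are invented for illustration, not results from this model) laid out the way scikit-learn returns it: rows are true classes, columns are predicted classes.

```python
# Hypothetical confusion matrix: rows = true class, columns = predicted class
cm = [[50, 10],
      [5, 35]]

true_negative = cm[0][0]
false_positive = cm[0][1]
false_negative = cm[1][0]
true_positive = cm[1][1]
total = true_negative + true_positive + false_negative + false_positive

accuracy = (true_positive + true_negative) / total
precision = true_positive / (true_positive + false_positive)
recall = true_positive / (true_positive + false_negative)
F1 = (2 * true_positive) / (2 * true_positive + false_positive + false_negative)

print('accuracy  %.4f' % accuracy)   # 0.8500
print('precision %.4f' % precision)  # 0.7778
print('recall    %.4f' % recall)     # 0.8750
print('F1        %.4f' % F1)         # 0.8235
```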

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-9-18a5ed93ad65> in <module>()

----> 1 best = fmin(objective, space, algo=tpe.suggest, max_evals=max_loop)

2

3 best_hidden = hidden_list[best['hidden']]

4 best_activation = activation_list[best['activation']].__name__

5 best_optimizer = optimizer_list[best['optimizer']]

~\Anaconda3\lib\site-packages\hyperopt\fmin.py in fmin(fn, space, algo, max_evals, trials, rstate, allow_trials_fmin, pass_expr_memo_ctrl, catch_eval_exceptions, verbose, return_argmin)

312

313 domain = base.Domain(fn, space,

--> 314 pass_expr_memo_ctrl=pass_expr_memo_ctrl)

315

316 rval = FMinIter(algo, domain, trials, max_evals=max_evals,

~\Anaconda3\lib\site-packages\hyperopt\base.py in __init__(self, fn, expr, workdir, pass_expr_memo_ctrl, name, loss_target)

784 before = pyll.dfs(self.expr)

785 # -- raises exception if expr contains cycles

--> 786 pyll.toposort(self.expr)

787 vh = self.vh = VectorizeHelper(self.expr, self.s_new_ids)

788 # -- raises exception if v_expr contains cycles

~\Anaconda3\lib\site-packages\hyperopt\pyll\base.py in toposort(expr)

713 G.add_edges_from([(n_in, node) for n_in in node.inputs()])

714 order = nx.topological_sort(G)

--> 715 assert order[-1] == expr

716 return order

717

TypeError: 'generator' object is not subscriptable
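The traceback ends in hyperopt's `toposort`, which indexes the result of `nx.topological_sort(G)` with `order[-1]`. In NetworkX 2.x that function returns a generator instead of a list, which would explain the `TypeError`. The sketch below reproduces the failure with a stand-in generator (`topological_sort_like` is hypothetical and is not the NetworkX function) and shows that materializing it as a list makes indexing work.

```python
def topological_sort_like(nodes):
    """Stand-in for a function that yields nodes lazily instead of returning a list."""
    for node in nodes:
        yield node

nodes = ['hidden', 'activation', 'optimizer', 'space']

# Indexing a generator fails in the same way as in the traceback
order = topological_sort_like(nodes)
try:
    order[-1]
except TypeError as err:
    message = str(err)
    print('TypeError:', message)

# Converting to a list first makes the last element accessible
order = list(topological_sort_like(nodes))
print(order[-1])
```

Since the failing line is inside hyperopt itself, the usual remedies are to install a NetworkX 1.x release or a hyperopt release that supports NetworkX 2.x; which one applies depends on the versions installed.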